OpenAI Assistants API v2 - AIShorts #3

A brand-new series where I break down AI, GenAI, and agents one concept at a time. Whether it lands daily or weekly, each post gives you the insights you need to keep up with the fast-moving world of AI.

🚀 OpenAI Assistants API v2 -> Function Calling, File Search, Code Interpreter, and Production-Ready AI Assistants

This is an advanced guide to building enterprise-grade AI assistants with the latest capabilities of OpenAI's Assistants API.

This guide walks through practical workflows for implementing the OpenAI Assistants API v2, with worked examples and production-oriented configurations. The focus is on scalable setups you can adapt for deployment, with notes on optimization and monitoring.

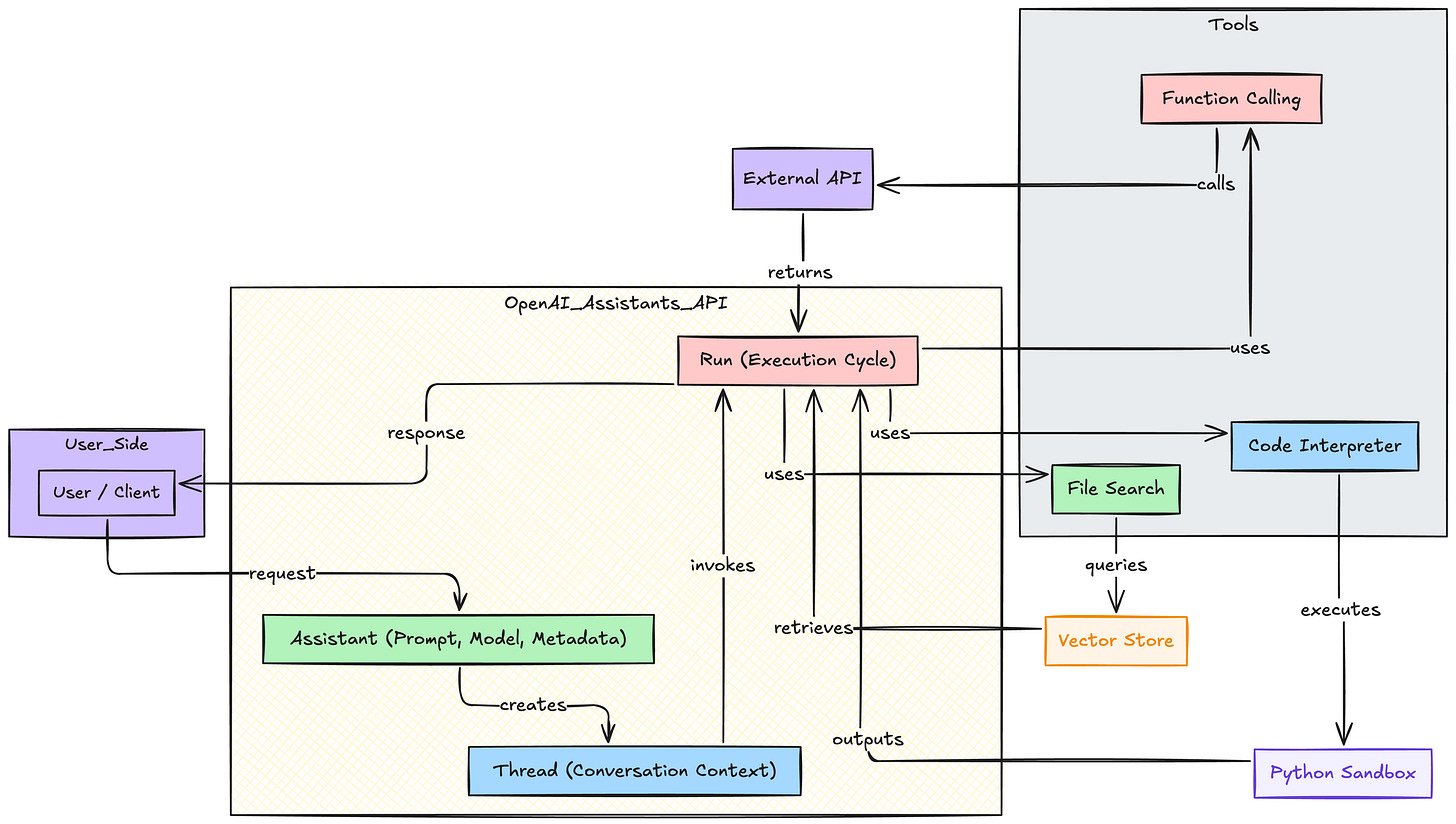

What is the OpenAI Assistants API?

The Assistants API is a higher-level, stateful layer over the same models that power the Chat Completions endpoint.

It introduces managed threads (stateful conversations), runs (execution cycles), and first-class tools for function calling, file search, and a sandboxed code interpreter. In short, OpenAI hosts the agent framework so you can focus on business logic instead of building session storage, retrieval pipelines, and tool routers from scratch.

Why Use the Assistants API?

• Built-in State Management – Automatically persists multi-turn context and metadata.

• Unified Tooling Layer – One schema for function calls, code execution, and vector search.

• Enterprise Observability – Runs, files, and vector stores are surfaced in the OpenAI dashboard for monitoring, quotas, and audits.

• Rapid Development – Higher-level primitives reduce boilerplate compared with Chat Completions plus custom glue code.

When Should You Choose the Assistants API?

Choose Assistants when you need any of the following:

Long-lived, multi-session assistants (e.g., customer support, internal help desks).

Rich tool orchestration that calls your own APIs or runs Python code.

Retrieval-augmented generation over large document sets via vector stores + file search.

Production metrics, quota enforcement, and compliance reporting handled by OpenAI.

If you only need one-off responses and are happy to manage conversation history yourself, the lighter Chat Completions endpoint (chat.completions), which also supports function calling, may be sufficient.


Step-by-Step: Setting Up OpenAI Assistants for Production

1. Define Your Assistant Architecture

Core Components:

Assistant: The AI entity with instructions, tools, and model configuration

Threads: Conversation sessions that maintain context

Messages: Individual exchanges within threads

Runs: Execution instances that process messages and generate responses

Tools: Function calling, file search, and code interpreter capabilities
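Runs are the component most client code has to supervise: a run moves through a series of statuses, and the client must decide whether to keep polling, submit tool outputs, or stop. The sketch below maps the v2 run statuses, as listed in the API reference at the time of writing, to those three actions. `next_step` and `NextStep` are hypothetical names for illustration, not SDK APIs.

```python
from enum import Enum

# Assistants API v2 run statuses, grouped by what the client should do next
POLL_STATUSES = {"queued", "in_progress", "cancelling"}
ACTION_STATUSES = {"requires_action"}
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired", "incomplete"}


class NextStep(Enum):
    POLL = "poll"  # run still executing: check again shortly
    ACT = "act"    # run paused: submit tool outputs to resume it
    DONE = "done"  # run finished: read messages or inspect run.last_error


def next_step(status: str) -> NextStep:
    """Map a run status string to the client's next action (hypothetical helper)."""
    if status in POLL_STATUSES:
        return NextStep.POLL
    if status in ACTION_STATUSES:
        return NextStep.ACT
    if status in TERMINAL_STATUSES:
        return NextStep.DONE
    # Fail loudly on statuses this sketch does not know about
    raise ValueError(f"Unknown run status: {status!r}")


if __name__ == "__main__":
    for s in ["queued", "requires_action", "completed", "incomplete"]:
        print(s, "->", next_step(s).value)
```

Raising on unknown statuses is a deliberate choice: if the API adds a status later, a polling loop built on this helper stops visibly instead of spinning forever.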

Key Architectural Decisions:

Model selection (GPT-4o for complex reasoning, GPT-4o-mini for cost optimization)

Tool combinations and external integrations

Thread persistence and session management

Vector store configurations for file search

Function calling patterns for external APIs
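One way to keep these decisions explicit is to capture them in a single spec object and derive the assistants.create arguments from it. The sketch below assumes the model split described in this guide (GPT-4o for complex work, GPT-4o-mini otherwise). `AssistantSpec` and `to_create_kwargs` are hypothetical names. The output follows the v2 request shape, in which file_search reads vector stores from `tool_resources`.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class AssistantSpec:
    """Hypothetical container for the architectural decisions listed above."""
    name: str
    instructions: str
    complex_tasks: bool = False                                    # drives model selection
    code_interpreter: bool = False
    vector_store_ids: List[str] = field(default_factory=list)      # enables file_search
    functions: List[Dict[str, Any]] = field(default_factory=list)  # function JSON schemas
    metadata: Dict[str, str] = field(default_factory=dict)

    @property
    def model(self) -> str:
        # GPT-4o for complex work, GPT-4o-mini for cost-sensitive standard tasks
        return "gpt-4o" if self.complex_tasks else "gpt-4o-mini"

    def to_create_kwargs(self) -> Dict[str, Any]:
        """Build keyword arguments for client.beta.assistants.create(...)."""
        tools: List[Dict[str, Any]] = [{"type": "function", "function": f} for f in self.functions]
        kwargs: Dict[str, Any] = {
            "name": self.name,
            "instructions": self.instructions,
            "model": self.model,
            "metadata": self.metadata,
        }
        if self.code_interpreter:
            tools.append({"type": "code_interpreter"})
        if self.vector_store_ids:
            tools.append({"type": "file_search"})
            # file_search only sees vector stores attached through tool_resources
            kwargs["tool_resources"] = {"file_search": {"vector_store_ids": self.vector_store_ids}}
        kwargs["tools"] = tools
        return kwargs
```

Usage would look like `client.beta.assistants.create(**AssistantSpec(name="Docs", instructions="...", vector_store_ids=[vs_id]).to_create_kwargs())`. Keeping the spec in code or config makes model and tool choices reviewable in version control.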

2. Install and Configure the OpenAI SDK

```bash
# Install the OpenAI Python SDK (Assistants API v2 support arrived in openai 1.21.0)
pip install "openai>=1.21.0"

# For the production service in step 7
pip install "openai>=1.21.0" "fastapi>=0.104.0" "uvicorn[standard]>=0.24.0" redis
```

Production Configuration:

```python
import os

from openai import OpenAI
# Types used as hints by the classes in later steps
from openai.types.beta import Assistant, Thread
from openai.types.beta.threads import Message, Run

# Initialize the OpenAI client with production settings
client = OpenAI(
    api_key=os.environ["OPENAI_API_KEY"],  # keep keys out of source code
    timeout=60.0,   # longer timeout for complex operations
    max_retries=3,  # the SDK retries connection errors, 429s and 5xx responses automatically
)

# Create a basic assistant with production settings
assistant = client.beta.assistants.create(
    name="Production Assistant",
    instructions="""You are a helpful assistant designed to help with business operations.
Always provide accurate, well-structured responses and use available tools when appropriate.
If you're unsure about something, ask clarifying questions.""",
    model="gpt-4o",  # GPT-4o for quality; gpt-4o-mini is cheaper for simpler workloads
    tools=[
        {"type": "code_interpreter"},
        {"type": "file_search"},
    ],
    metadata={
        "environment": "production",
        "version": "1.0",
        "created_by": "system",
    },
)

print(f"Assistant created with ID: {assistant.id}")
```
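To keep the key and tuning parameters out of source code, the client settings can be loaded from environment variables and validated at startup, so a misconfigured pod fails fast. This is a minimal sketch. OPENAI_API_KEY is the variable the SDK itself reads, while `OPENAI_TIMEOUT` and `OPENAI_MAX_RETRIES` are hypothetical names chosen for this example.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class OpenAISettings:
    api_key: str
    timeout: float = 60.0
    max_retries: int = 3


def load_settings(env: Optional[Mapping[str, str]] = None) -> OpenAISettings:
    """Read client settings from the environment, failing fast if the key is missing."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY", "").strip()
    if not api_key:
        raise RuntimeError("OPENAI_API_KEY is not set")
    return OpenAISettings(
        api_key=api_key,
        # OPENAI_TIMEOUT / OPENAI_MAX_RETRIES are hypothetical variable names
        timeout=float(env.get("OPENAI_TIMEOUT", "60")),
        max_retries=int(env.get("OPENAI_MAX_RETRIES", "3")),
    )
```

The result plugs straight into the client: `s = load_settings(); client = OpenAI(api_key=s.api_key, timeout=s.timeout, max_retries=s.max_retries)`. Accepting an optional mapping makes the function easy to test without touching the real environment.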

Enhanced Error Handling:

```python
import time
from typing import Dict, Optional

import openai
from openai import OpenAI
from openai.types.beta import Assistant


class ProductionAssistantManager:
    def __init__(self, api_key: str):
        # Note: the SDK already retries transient errors (2 times by default);
        # the loop below adds an outer, application-level policy on top
        self.client = OpenAI(api_key=api_key)
        self.assistants: Dict[str, Assistant] = {}

    def create_assistant_with_retry(self, config: dict, max_retries: int = 3) -> Optional[Assistant]:
        """Create assistant with exponential backoff retry logic."""
        for attempt in range(max_retries):
            try:
                assistant = self.client.beta.assistants.create(**config)
                self.assistants[assistant.id] = assistant
                return assistant
            except openai.APIConnectionError as e:
                print(f"Connection error on attempt {attempt + 1}: {e}")
                if attempt < max_retries - 1:
                    time.sleep(2 ** attempt)  # 1s, 2s, 4s, ...
            except openai.RateLimitError as e:
                # Must be caught before APIStatusError, of which it is a subclass
                print(f"Rate limit exceeded on attempt {attempt + 1}: {e}")
                if attempt < max_retries - 1:
                    time.sleep(60)  # wait 1 minute for the rate limit to reset
            except openai.APIStatusError as e:
                # Other 4xx/5xx responses are treated as non-retryable here
                print(f"API error on attempt {attempt + 1}: {e.status_code} - {e.response}")
                return None
        return None
```
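The loop above hard-codes one policy per exception type. A more reusable pattern is a generic helper with capped exponential backoff, random "full jitter" so that many clients don't retry in lockstep, and an optional hook for a server-provided wait hint. This is a sketch, not an SDK feature. `retry_with_backoff` and its parameters are hypothetical, and `sleep` is injectable so the helper can be tested without waiting.

```python
import random
import time
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fn: Callable[[], T],
    retryable: Tuple[Type[BaseException], ...],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    retry_after: Callable[[BaseException], Optional[float]] = lambda exc: None,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying `retryable` errors with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except retryable as exc:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error unchanged
            delay = retry_after(exc)  # e.g. seconds parsed from a Retry-After header
            if delay is None:
                # Full jitter: random delay in [0, min(max_delay, base_delay * 2^attempt)]
                delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            sleep(delay)
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

With the SDK, you would call it as `retry_with_backoff(lambda: client.beta.assistants.create(**config), retryable=(openai.APIConnectionError, openai.RateLimitError))`. Errors outside `retryable` (for example a 400 from a bad config) propagate immediately. For `RateLimitError`, a `retry_after` callback could read a `retry-after` value from `e.response.headers` when the server sends one.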

3. Implement Thread Management and Conversations

Complete Thread Management System:

```python
import time
from datetime import datetime, timezone
from typing import Callable, Dict, List, Optional

from openai import OpenAI
from openai.types.beta import Thread
from openai.types.beta.threads import Message, Run

# Statuses after which a run will not progress further
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired", "incomplete"}


class ThreadManager:
    def __init__(self, client: OpenAI):
        self.client = client
        self.active_threads: Dict[str, Thread] = {}

    def create_thread(self, user_id: str, metadata: Optional[Dict[str, str]] = None) -> Thread:
        """Create a new conversation thread tagged with the user ID."""
        thread_metadata = {
            "user_id": user_id,
            "created_at": datetime.now(timezone.utc).isoformat(),
            **(metadata or {}),  # metadata values must be strings
        }
        thread = self.client.beta.threads.create(metadata=thread_metadata)
        self.active_threads[thread.id] = thread
        return thread

    def add_message(self, thread_id: str, content: str, role: str = "user") -> Message:
        """Add a message to an existing thread."""
        return self.client.beta.threads.messages.create(
            thread_id=thread_id, role=role, content=content
        )

    def run_assistant(self, thread_id: str, assistant_id: str,
                      instructions: Optional[str] = None) -> Run:
        """Start a run of the assistant on a thread."""
        run_params = {"thread_id": thread_id, "assistant_id": assistant_id}
        if instructions:
            # `instructions` replaces the assistant's instructions for this run;
            # use `additional_instructions` to append to them instead
            run_params["instructions"] = instructions
        return self.client.beta.threads.runs.create(**run_params)

    def wait_for_completion(self, thread_id: str, run_id: str, timeout: int = 300,
                            on_action: Optional[Callable[[str, Run], Run]] = None) -> Run:
        """Poll a run until it finishes; pass tool calls to `on_action` if one is given."""
        start_time = time.time()
        while time.time() - start_time < timeout:
            run = self.client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run_id)
            if run.status in TERMINAL_STATUSES:
                return run
            if run.status == "requires_action":
                if on_action is None:
                    return run  # let the caller submit tool outputs
                on_action(thread_id, run)  # submits tool outputs; the run then resumes
            time.sleep(1)
        raise TimeoutError(f"Run {run_id} did not complete within {timeout} seconds")

    def get_messages(self, thread_id: str, limit: int = 20) -> List[Message]:
        """Retrieve the most recent messages from a thread, newest first."""
        messages = self.client.beta.threads.messages.list(
            thread_id=thread_id, limit=limit, order="desc"
        )
        return list(messages.data)


# Usage
manager = ThreadManager(client)
thread = manager.create_thread("user_123", {"session": "web_chat"})
manager.add_message(thread.id, "What's the weather like today?")
run = manager.run_assistant(thread.id, assistant.id)
completed_run = manager.wait_for_completion(thread.id, run.id)
```
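The Threads API lets you create, retrieve, modify, and delete threads, but it has no endpoint for listing them, so your application must remember which thread belongs to which user. It should also refuse client-supplied thread IDs that belong to someone else. This is a minimal in-memory sketch. `ThreadRegistry` is hypothetical, and in production the dictionaries would typically live in Redis or a database.

```python
from typing import Callable, Dict, Tuple


class ThreadRegistry:
    """Maps (user_id, session) pairs to thread IDs and records each thread's owner."""

    def __init__(self, create_thread: Callable[[str], str]):
        # create_thread(user_id) -> new thread ID, e.g. lambda uid: manager.create_thread(uid).id
        self._create_thread = create_thread
        self._threads: Dict[Tuple[str, str], str] = {}
        self._owners: Dict[str, str] = {}

    def get_or_create(self, user_id: str, session: str = "default") -> str:
        """Return the user's thread for this session, creating it on first use."""
        key = (user_id, session)
        if key not in self._threads:
            thread_id = self._create_thread(user_id)
            self._threads[key] = thread_id
            self._owners[thread_id] = user_id
        return self._threads[key]

    def check_owner(self, thread_id: str, user_id: str) -> None:
        """Raise if a thread ID is unknown or owned by a different user."""
        if self._owners.get(thread_id) != user_id:
            raise PermissionError(f"Thread {thread_id} is not owned by {user_id}")
```

Passing the creation function in, rather than the OpenAI client, keeps the registry independent of the SDK and easy to test with a fake.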

4. Configure Function Calling and External Integrations

Advanced Function Calling Setup:

```python
import json
from typing import Any, Callable, Dict, List

from openai import OpenAI
from openai.types.beta import Assistant
from openai.types.beta.threads import Run


# Define external functions for the assistant (mock implementations)
def get_weather(location: str) -> Dict[str, Any]:
    """Get current weather for a location."""
    # Mock weather API call
    return {"location": location, "temperature": "22°C", "condition": "Sunny", "humidity": "65%"}


def search_database(query: str, table: str = "users") -> List[Dict]:
    """Search internal database."""
    # Mock database search
    return [
        {"id": 1, "name": "John Doe", "email": "john@example.com"},
        {"id": 2, "name": "Jane Smith", "email": "jane@example.com"},
    ]


def send_notification(recipient: str, message: str, channel: str = "email") -> bool:
    """Send notification to user."""
    # Mock notification service
    print(f"Sending {channel} to {recipient}: {message}")
    return True


# Enhanced AssistantManager with function handling
class EnhancedAssistantManager:
    def __init__(self, client: OpenAI):
        self.client = client
        # Maps tool names (as declared to the model) to local Python callables
        self.function_registry: Dict[str, Callable[..., Any]] = {
            "get_weather": get_weather,
            "search_database": search_database,
            "send_notification": send_notification,
        }

    def create_function_assistant(self) -> Assistant:
        """Create assistant with function calling capabilities."""
        return self.client.beta.assistants.create(
            name="Function Calling Assistant",
            instructions="You can call functions to get real-time data and perform actions.",
            model="gpt-4o",
            tools=[
                {
                    "type": "function",
                    "function": {
                        "name": "get_weather",
                        "description": "Get current weather information",
                        "parameters": {
                            "type": "object",
                            "properties": {
                                "location": {"type": "string", "description": "City or location name"},
                            },
                            "required": ["location"],
                        },
                    },
                },
                {
                    "type": "function",
                    "function": {
                        "name": "search_database",
                        "description": "Search internal database for records",
                        "parameters": {
                            "type": "object",
                            "properties": {
                                "query": {"type": "string", "description": "Search query"},
                                "table": {"type": "string", "description": "Database table to search"},
                            },
                            "required": ["query"],
                        },
                    },
                },
                # send_notification is registered locally; declare its schema here too
                # if the model should be able to call it
            ],
        )

    def handle_function_calls(self, thread_id: str, run: Run) -> Run:
        """Execute the requested tool calls locally and submit all outputs at once."""
        if not (run.required_action and run.required_action.type == "submit_tool_outputs"):
            return run
        tool_outputs = []
        for tool_call in run.required_action.submit_tool_outputs.tool_calls:
            function_name = tool_call.function.name
            func = self.function_registry.get(function_name)
            try:
                if func is None:
                    raise ValueError(f"Unknown function: {function_name}")
                function_args = json.loads(tool_call.function.arguments or "{}")
                output = json.dumps(func(**function_args))
            except Exception as e:
                # Report errors back to the model; every tool call still needs an output
                output = json.dumps({"error": str(e)})
            tool_outputs.append({"tool_call_id": tool_call.id, "output": output})

        # Submit tool outputs; the run then resumes
        return self.client.beta.threads.runs.submit_tool_outputs(
            thread_id=thread_id, run_id=run.id, tool_outputs=tool_outputs
        )
```
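Writing JSON schemas by hand for every function is tedious, and they can drift out of sync with the Python signature. One option is to derive a basic schema from the function itself with `inspect`. This is a sketch under simple assumptions: only plain `str`/`int`/`float`/`bool`/`list`/`dict` annotations are mapped, parameters without defaults are marked required, and per-parameter descriptions are not captured. `function_tool` is a hypothetical helper, not part of the OpenAI SDK.

```python
import inspect
from typing import Any, Callable, Dict, List, get_type_hints

# JSON Schema types for simple Python annotations; other annotations stay untyped
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean", list: "array", dict: "object"}


def function_tool(fn: Callable[..., Any], description: str = "") -> Dict[str, Any]:
    """Build an Assistants function-tool definition from a Python signature."""
    hints = get_type_hints(fn)
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        schema: Dict[str, Any] = {}
        json_type = _JSON_TYPES.get(hints.get(name))
        if json_type:
            schema["type"] = json_type
        properties[name] = schema
        # Parameters without defaults must be supplied by the model
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "type": "function",
        "function": {
            "name": fn.__name__,
            "description": description or (inspect.getdoc(fn) or "").strip(),
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }
```

For example, `function_tool(send_notification)` yields a tool named `send_notification` with `recipient` and `message` required and `channel` optional. You could add it to the `tools` list instead of hand-writing that schema. Descriptions for individual parameters would still need to be added by hand.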

5. Implement File Search and Document Processing

Production File Search Implementation:

```python
from pathlib import Path
from typing import Dict, List, Union

from openai import OpenAI
from openai.types.beta import Assistant


class DocumentProcessor:
    def __init__(self, client: OpenAI):
        self.client = client
        self.vector_stores: Dict[str, str] = {}

    def create_vector_store(self, name: str, expires_after_days: int = 7) -> str:
        """Create a vector store that expires after a period of inactivity."""
        # Newer SDK releases expose vector stores as client.vector_stores (without `beta`);
        # switch to that path if client.beta.vector_stores is missing in your version
        vector_store = self.client.beta.vector_stores.create(
            name=name,
            expires_after={"anchor": "last_active_at", "days": expires_after_days},
        )
        self.vector_stores[name] = vector_store.id
        return vector_store.id

    def upload_documents(self, vector_store_id: str,
                         file_paths: List[Union[str, Path]]) -> List[str]:
        """Upload multiple documents and attach them to a vector store."""
        file_ids = []
        for file_path in file_paths:
            try:
                with open(file_path, "rb") as file:
                    uploaded_file = self.client.files.create(file=file, purpose="assistants")
                file_ids.append(uploaded_file.id)
                print(f"Uploaded {file_path}: {uploaded_file.id}")
            except Exception as e:
                print(f"Failed to upload {file_path}: {e}")

        # Add files to the vector store; create_and_poll waits until they are
        # chunked and embedded, so searches issued afterwards can see them
        if file_ids:
            batch = self.client.beta.vector_stores.file_batches.create_and_poll(
                vector_store_id=vector_store_id, file_ids=file_ids
            )
            print(f"Batch status: {batch.status}, file counts: {batch.file_counts}")
        return file_ids

    def create_search_assistant(self, vector_store_id: str) -> Assistant:
        """Create assistant with file search capabilities."""
        return self.client.beta.assistants.create(
            name="Document Search Assistant",
            instructions="""You are a document search assistant. Use the file search tool to find
relevant information from uploaded documents. Always cite your sources and provide
specific references when possible.""",
            model="gpt-4o",
            tools=[{"type": "file_search"}],
            tool_resources={"file_search": {"vector_store_ids": [vector_store_id]}},
        )


# Usage example
doc_processor = DocumentProcessor(client)

# Create vector store for company documents
vector_store_id = doc_processor.create_vector_store("company_docs", expires_after_days=30)

# Upload documents
documents = ["policy.pdf", "handbook.pdf", "procedures.docx"]
file_ids = doc_processor.upload_documents(vector_store_id, documents)

# Create an assistant that searches this vector store
search_assistant = doc_processor.create_search_assistant(vector_store_id)
```
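The search assistant is told to cite its sources. With file search, those citations arrive as `file_citation` annotations on the text content. Each annotation carries the literal marker text embedded in the answer and the cited `file_id`. The sketch below turns those markers into numbered footnotes. `render_with_citations` is hypothetical, and the `filenames` mapping is assumed to be built by your code, for example from `client.files.retrieve(file_id).filename`.

```python
from typing import Any, Dict, List, Tuple


def render_with_citations(message: Any, filenames: Dict[str, str]) -> Tuple[str, List[str]]:
    """Replace file_search citation markers with [n] and return the text plus a source list.

    `message` is an assistant message object; `filenames` maps file IDs to display names.
    """
    text_parts: List[str] = []
    sources: List[str] = []
    for block in message.content:
        if block.type != "text":
            continue  # skip non-text blocks such as image_file
        value = block.text.value
        for annotation in block.text.annotations:
            if annotation.type == "file_citation":
                file_id = annotation.file_citation.file_id
                sources.append(filenames.get(file_id, file_id))
                # annotation.text is the marker string embedded in the answer
                value = value.replace(annotation.text, f"[{len(sources)}]")
        text_parts.append(value)
    return "\n".join(text_parts), sources
```

The function reads attributes rather than importing SDK types, so it works on real message objects and is easy to exercise with stand-ins in tests.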

6. Set Up Code Interpreter and Data Analysis

Advanced Code Interpreter Configuration:

```python
import time
from typing import Any, Dict

from openai import OpenAI
from openai.types.beta import Assistant
from openai.types.beta.threads import Run

# Statuses after which a run will not progress further
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired", "incomplete"}


class DataAnalysisAssistant:
    def __init__(self, client: OpenAI):
        self.client = client

    def create_analyst_assistant(self) -> Assistant:
        """Create assistant with code interpreter for data analysis."""
        # pandas/matplotlib run inside OpenAI's sandbox, so they are not needed locally
        return self.client.beta.assistants.create(
            name="Data Analysis Assistant",
            instructions="""You are a data analysis expert. Use the code interpreter to:
1. Load and analyze datasets
2. Create visualizations and charts
3. Perform statistical analysis
4. Generate insights and recommendations
Always explain your analysis process and provide clear interpretations of results.""",
            model="gpt-4o",
            tools=[{"type": "code_interpreter"}],
        )

    def upload_dataset(self, file_path: str) -> str:
        """Upload dataset for analysis."""
        with open(file_path, "rb") as file:
            uploaded_file = self.client.files.create(file=file, purpose="assistants")
        return uploaded_file.id

    def analyze_data(self, assistant_id: str, file_id: str, analysis_request: str) -> Dict[str, Any]:
        """Perform data analysis with uploaded dataset."""
        # Create thread for analysis
        thread = self.client.beta.threads.create()

        # Attach the dataset to the request so code interpreter can open it
        self.client.beta.threads.messages.create(
            thread_id=thread.id,
            role="user",
            content=analysis_request,
            attachments=[{"file_id": file_id, "tools": [{"type": "code_interpreter"}]}],
        )

        # Run analysis and wait for the result
        run = self.client.beta.threads.runs.create(thread_id=thread.id, assistant_id=assistant_id)
        completed_run = self.wait_for_completion(thread.id, run.id)
        messages = self.client.beta.threads.messages.list(thread_id=thread.id)

        return {
            "thread_id": thread.id,
            "run_id": run.id,
            "status": completed_run.status,
            # Each entry is a list of content blocks (text, image_file, ...)
            "results": [msg.content for msg in messages.data if msg.role == "assistant"],
        }

    def wait_for_completion(self, thread_id: str, run_id: str, timeout: float = 600) -> Run:
        """Poll until the analysis run finishes or the timeout expires."""
        deadline = time.time() + timeout
        while time.time() < deadline:
            run = self.client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run_id)
            # No function tools here, so requires_action would never resolve; return it as-is
            if run.status in TERMINAL_STATUSES or run.status == "requires_action":
                return run
            time.sleep(2)
        raise TimeoutError(f"Run {run_id} did not finish within {timeout} seconds")


# Usage
analyst = DataAnalysisAssistant(client)
analyst_assistant = analyst.create_analyst_assistant()

# Upload and analyze dataset
file_id = analyst.upload_dataset("sales_data.csv")
results = analyst.analyze_data(
    analyst_assistant.id,
    file_id,
    "Please analyze this sales dataset and create visualizations showing trends and insights.",
)
```
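Charts produced by the code interpreter come back as `image_file` content blocks alongside the text explanation, so callers usually need to separate the two. This is a minimal sketch. `collect_outputs` is a hypothetical helper that works on the message objects from `client.beta.threads.messages.list(...).data`.

```python
from typing import Any, Dict, List


def collect_outputs(messages: List[Any]) -> Dict[str, List[str]]:
    """Separate assistant text from generated image file IDs (e.g. code interpreter charts)."""
    outputs: Dict[str, List[str]] = {"text": [], "image_file_ids": []}
    for msg in messages:
        if msg.role != "assistant":
            continue  # ignore the user's own messages
        for block in msg.content:
            if block.type == "text":
                outputs["text"].append(block.text.value)
            elif block.type == "image_file":
                outputs["image_file_ids"].append(block.image_file.file_id)
    return outputs
```

Each collected image can then be downloaded with `client.files.content(file_id).read()` and written to disk. Code interpreter charts are typically PNG images.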

7. Production Deployment and Scaling

Complete Production Setup:

```python
import json
import logging
import os
import time
from typing import Any, Callable, Dict, Optional

import redis
from fastapi import FastAPI, HTTPException
from fastapi.middleware.cors import CORSMiddleware
from openai import OpenAI
from openai.types.beta.threads import Run
from pydantic import BaseModel

# Configure logging
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
)
logger = logging.getLogger(__name__)

app = FastAPI(title="OpenAI Assistants API", version="1.0.0")

# CORS middleware
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],  # restrict to your front-end origins in production
    allow_methods=["*"],
    allow_headers=["*"],
)

# Assistant configurations
ASSISTANT_CONFIGS = {
    "customer_support": {
        "name": "Customer Support Assistant",
        "instructions": "Help customers with their inquiries professionally and efficiently.",
        "model": "gpt-4o-mini",  # use mini for cost optimization
        "tools": [
            # {"type": "function", "function": {...}},  # add your support function schemas here
        ],
    },
    "data_analyst": {
        "name": "Data Analysis Assistant",
        "instructions": "Analyze data and provide insights using code interpreter.",
        "model": "gpt-4o",
        "tools": [{"type": "code_interpreter"}],
    },
}

# Local implementations of function tools (see step 4); empty until you add some
FUNCTION_REGISTRY: Dict[str, Callable[..., Any]] = {}

FAILED_STATUSES = {"failed", "cancelled", "expired", "incomplete"}


class ProductionAssistantService:
    def __init__(self):
        self.client = OpenAI()  # reads OPENAI_API_KEY from the environment
        # Redis for session/thread caching; REDIS_URL is set in the Kubernetes manifest
        self.redis_client = redis.Redis.from_url(os.environ.get("REDIS_URL", "redis://localhost:6379/0"))
        self.assistants: Dict[str, str] = {}
        self.initialize_assistants()

    def initialize_assistants(self):
        """Create one assistant per configuration.

        Note: this runs in every process on every start, so each replica creates
        new assistants; persist and reuse assistant IDs in real deployments.
        """
        for assistant_type, config in ASSISTANT_CONFIGS.items():
            try:
                assistant = self.client.beta.assistants.create(**config)
                self.assistants[assistant_type] = assistant.id
                logger.info(f"Initialized {assistant_type} assistant: {assistant.id}")
            except Exception as e:
                logger.error(f"Failed to initialize {assistant_type}: {e}")


# Request/Response models
class ChatRequest(BaseModel):
    message: str
    assistant_type: str = "customer_support"
    thread_id: Optional[str] = None
    user_id: str


class ChatResponse(BaseModel):
    response: str
    thread_id: str
    assistant_id: str
    run_id: str


# Global service instance
assistant_service = ProductionAssistantService()


def handle_function_calls(thread_id: str, run: Run) -> Run:
    """Run the requested tools locally and submit every output in one call."""
    tool_outputs = []
    for call in run.required_action.submit_tool_outputs.tool_calls:
        func = FUNCTION_REGISTRY.get(call.function.name)
        try:
            if func is None:
                raise ValueError(f"Unknown function: {call.function.name}")
            output = json.dumps(func(**json.loads(call.function.arguments or "{}")))
        except Exception as e:
            output = json.dumps({"error": str(e)})
        tool_outputs.append({"tool_call_id": call.id, "output": output})
    return assistant_service.client.beta.threads.runs.submit_tool_outputs(
        thread_id=thread_id, run_id=run.id, tool_outputs=tool_outputs
    )


def wait_for_run_completion(thread_id: str, run_id: str, max_iterations: int = 30) -> Run:
    """Poll a run (about 1s per iteration), handling function calls along the way."""
    for _ in range(max_iterations):
        run = assistant_service.client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run_id)
        if run.status == "completed":
            return run
        if run.status in FAILED_STATUSES:
            raise RuntimeError(f"Run ended with status: {run.status}")
        if run.status == "requires_action":
            handle_function_calls(thread_id, run)
        time.sleep(1)
    raise TimeoutError("Run timed out")


# Plain `def` (not `async def`): FastAPI runs it in a worker thread, so the
# blocking OpenAI client and time.sleep() do not stall the event loop
@app.post("/chat", response_model=ChatResponse)
def chat(request: ChatRequest):
    """Main chat endpoint for assistant interactions."""
    # Validate before the try block so this 400 is not rewritten into a 500
    assistant_id = assistant_service.assistants.get(request.assistant_type)
    if not assistant_id:
        raise HTTPException(status_code=400, detail="Invalid assistant type")

    try:
        client = assistant_service.client
        # Reuse the caller's thread or create one
        # (in production, verify the thread belongs to request.user_id first)
        if request.thread_id:
            thread_id = request.thread_id
        else:
            thread_id = client.beta.threads.create(metadata={"user_id": request.user_id}).id

        # Add the user message and run the assistant
        client.beta.threads.messages.create(thread_id=thread_id, role="user", content=request.message)
        run = client.beta.threads.runs.create(thread_id=thread_id, assistant_id=assistant_id)
        wait_for_run_completion(thread_id, run.id)

        # The newest message is the assistant's reply; take its first text block
        messages = client.beta.threads.messages.list(thread_id=thread_id, limit=1, order="desc")
        response_content = next(
            (block.text.value for block in messages.data[0].content if block.type == "text"), ""
        )
        return ChatResponse(
            response=response_content, thread_id=thread_id, assistant_id=assistant_id, run_id=run.id
        )
    except Exception as e:
        logger.exception(f"Chat error: {e}")
        raise HTTPException(status_code=500, detail="Internal error while processing the chat")


@app.get("/health")
def health_check():
    """Health check endpoint."""
    return {"status": "healthy", "assistants": len(assistant_service.assistants)}


if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)
```
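A thread can have only one active run at a time, and the API rejects new messages on a thread while a run is in progress. Two concurrent /chat requests carrying the same thread_id can therefore collide. One way to serialize them inside a single process is a lock per thread ID. This is a sketch, and `ThreadLocks` is a hypothetical helper built on `threading.Lock`, which suits endpoints running in FastAPI's worker threads. An `async def` endpoint would use `asyncio.Lock` the same way. Across several replicas a process-local lock is not enough, and you would need a distributed lock, for example one built on the Redis instance the service already connects to.

```python
import threading
from collections import defaultdict
from contextlib import contextmanager
from typing import DefaultDict, Iterator


class ThreadLocks:
    """One lock per OpenAI thread ID, so runs on the same thread never overlap (per process)."""

    def __init__(self) -> None:
        self._guard = threading.Lock()  # protects the dictionary of locks itself
        self._locks: DefaultDict[str, threading.Lock] = defaultdict(threading.Lock)

    @contextmanager
    def hold(self, thread_id: str, timeout: float = 30.0) -> Iterator[None]:
        """Hold the lock for `thread_id`, or raise TimeoutError if it stays busy."""
        with self._guard:
            lock = self._locks[thread_id]
        if not lock.acquire(timeout=timeout):
            raise TimeoutError(f"Thread {thread_id} is busy with another run")
        try:
            yield
        finally:
            lock.release()
```

Inside the handler, the message-create, run-create, and wait steps would sit under `with thread_locks.hold(thread_id):`, and a TimeoutError could be mapped to HTTP 409. The dictionary grows with every thread seen, so a long-lived service would also need to evict idle locks.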

Kubernetes Deployment:

```yaml
# k8s-assistants-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: openai-assistants-api
  labels:
    app: assistants-api
spec:
  replicas: 3
  selector:
    matchLabels:
      app: assistants-api
  template:
    metadata:
      labels:
        app: assistants-api
    spec:
      containers:
        - name: assistants-api
          image: your-registry/assistants-api:latest
          ports:
            - containerPort: 8000
          env:
            - name: OPENAI_API_KEY
              valueFrom:
                secretKeyRef:
                  name: openai-secret
                  key: api-key
            - name: REDIS_URL
              value: "redis://redis-service:6379"
          resources:
            requests:
              memory: "512Mi"
              cpu: "250m"
            limits:
              memory: "1Gi"
              cpu: "500m"
          livenessProbe:
            httpGet:
              path: /health
              port: 8000
            initialDelaySeconds: 30
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /health
              port: 8000
            initialDelaySeconds: 5
            periodSeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  name: assistants-api-service
spec:
  selector:
    app: assistants-api
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8000
  type: LoadBalancer
```
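With replicas: 3, every pod that creates assistants at startup adds a fresh set each time it starts or restarts, which clutters the account and scatters conversations across near-identical assistants. A common alternative is to create the assistants once, for example from a one-off setup job, and pass their IDs to the pods through environment variables. This is a minimal sketch that assumes a hypothetical `ASSISTANT_ID_<TYPE>` naming convention. `create` stands in for a call such as `lambda cfg: client.beta.assistants.create(**cfg).id`.

```python
from typing import Callable, Dict, Mapping


def resolve_assistant_ids(
    configs: Mapping[str, dict],
    env: Mapping[str, str],
    create: Callable[[dict], str],
    allow_create: bool = False,
) -> Dict[str, str]:
    """Reuse assistant IDs from env vars such as ASSISTANT_ID_CUSTOMER_SUPPORT.

    Missing IDs are created only when allow_create is True (e.g. in a setup job),
    so ordinary pods never create duplicate assistants.
    """
    resolved: Dict[str, str] = {}
    for assistant_type, config in configs.items():
        env_key = f"ASSISTANT_ID_{assistant_type.upper()}"  # hypothetical naming convention
        assistant_id = env.get(env_key)
        if not assistant_id:
            if not allow_create:
                raise RuntimeError(f"{env_key} is not set and creation is disabled")
            assistant_id = create(config)
        resolved[assistant_type] = assistant_id
    return resolved
```

In the Deployment, these IDs would become additional env entries (for example sourced from a ConfigMap). The setup job runs with allow_create=True once and records the IDs it prints.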

OpenAI Assistants API v2: Production-Ready AI Assistants

The OpenAI Assistants API v2 combines function calling, file search, and code interpreter behind managed threads and runs. This lets developers build context-aware assistants that handle complex, multi-step workflows without writing their own orchestration layer.

Key Production Benefits:

Persistent Threads: Maintain conversation context across sessions

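Advanced Tool Integration: Combine function calling, file search, and code interpreter in a single assistant

Horizontal Scaling: Because thread state lives with OpenAI, stateless API replicas can be scaled out to serve many concurrent conversations, within your account's rate limits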

Cost Optimization: Use GPT-4o-mini for standard tasks, GPT-4o for complex analysis

Built-in Streaming: Real-time response delivery for better UX
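Streaming is enabled by passing stream=True when creating a run (the Python SDK also offers a `client.beta.threads.runs.stream(...)` helper). The run then yields server-sent events such as `thread.message.delta`, whose `data.delta.content` holds incremental text. This is a minimal sketch that assembles the reply from those events while forwarding each chunk. `collect_stream_text` is hypothetical, and it assumes event objects expose `.event` and `.data` as the SDK's stream events do.

```python
from typing import Any, Callable, Iterable, List, Optional


def collect_stream_text(events: Iterable[Any], on_token: Optional[Callable[[str], None]] = None) -> str:
    """Assemble assistant text from Assistants API stream events, forwarding each chunk."""
    chunks: List[str] = []
    for event in events:
        if event.event != "thread.message.delta":
            continue  # ignore run and run-step lifecycle events in this sketch
        for part in event.data.delta.content or []:
            if part.type == "text" and part.text is not None and part.text.value:
                chunks.append(part.text.value)
                if on_token:
                    on_token(part.text.value)  # e.g. push to a websocket or SSE response
    return "".join(chunks)
```

Passing the iterator returned by `client.beta.threads.runs.create(thread_id=..., assistant_id=..., stream=True)` lets the UI render tokens as they arrive. A full implementation would also watch for `thread.run.requires_action` events to submit tool outputs.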

Industry Applications:

Customer support automation with function calling

Document analysis and search for legal/compliance teams

Data analysis and visualization for business intelligence

Code review and development assistance

Interactive educational and training platforms

Related Resources: